When I was young, my mom bemoaned the fact that she had spent more time in the emergency room with me than with my three sisters combined. I was what she called “gung ho,” by which she meant “recklessly enthusiastic.” Fearless and fast, I threw myself into everything with abandon, often resulting in injury. I remember one incident in particular in which, in my haste to greet an arriving guest at a friend’s birthday party, I smashed my face into a bar across a gap in the fence and knocked myself out.

I would move fast and break things — usually bones in my body.

The pace of change in the tech industry over the last few years, particularly with the advent of Large Language Models (LLMs) and artificial intelligence (AI), feels a bit like my childhood. Fearless and fast. Gung ho.

As educators, we may find these changes either exciting or frightening, depending on our point of view. Regardless of our position, careful thinking is in order as we learn to integrate AI into our educational practice. After all, our students’ futures are at stake. We don’t want to smash our faces in our haste to greet this new party guest. (I still bear the scar to prove it.)

Early in 2024, StudyForge published a blog article called “AI-Cademic Integrity: Keeping Students Honest in the Age of Artificial Intelligence.” In that article, I argued that it is important to teach our students how to think ethically about their AI use. We need to teach them why cheating with AI is wrong, provide clear boundaries for them, use violations as teachable moments, and, in all of these things, preserve healthy relationships with them.

The ethical concerns about AI usage, though, do not stop with our students. What about the use of AI in our educational practice?

- Are there ways that teachers can or should be using AI to enhance their students’ educational acumen?

- Should the use of AI in creating course outlines, making lesson plans, grading, or writing report cards be encouraged or avoided?

- What about the ethical considerations of the ways we allow or don’t allow our students to use AI?

- Are we helping or harming students through the decisions that we are making about how we use AI and how they can use AI?

Well, as I tell my students, the answer to most good questions is, “It depends.”

In the case of these questions, I do not believe there are any clear answers, but I do believe that careful, reflective thought is necessary.

Social Media as a Cautionary Tale

According to social psychologist Jonathan Haidt, social media has had an incredibly negative effect on the mental health of adolescents since smartphones were introduced to childhood about 20 years ago. “Rates of depression, anxiety, self-harm, and suicide rose sharply, more than doubling on most measures.” Children and adolescents were given access to social media without anyone understanding how much it would affect young people’s developing brains or considering how addictive a glowing rectangle in their pocket could become. Now, 20 years later, we know that social media and access to phones at a young age can be very harmful.

This does not mean that all new technologies will be harmful. However, what happened with social media should make us careful enough to ask questions about the impact AI will have on the brains of our students. In truth, we do not know yet, as the issue is still being studied. The tragic impacts of social media should, at the very least, serve as a cautionary tale. Before we put powerful technological tools into the hands of our children, we had better think carefully about how they might affect them.

MIT Researchers Sound an Alarm

Researchers at MIT recently published a study that examined how the use of AI affects brain activity during academic exercises. The researchers split participants into three groups and had them write essays while an EEG recorded their brain activity:

- One group had to use only their brains.

- A second group was allowed to use online search engines like Google.

- The third group was able to use ChatGPT.

They found that the brain-only group showed far more electrical activity in their brains while writing their essays, and the ChatGPT group showed the least. At the end of the task, the brain-only group could recall much more of what they had written and felt much more ownership of their work, while the ChatGPT group struggled to remember what they had written and felt less ownership of it.

On the one hand, these results seem obvious: if a student is using ChatGPT to write an essay for them, of course they are using their brain less. No one is shocked that outsourcing thinking reduces thinking. The study does raise other questions, though:

- If we allow students to do AI-assisted academic work, are we actually hindering their brain development, even if the quality of their academic output improves? As educators, are we okay with this tradeoff?

- What skills or abilities might students lose or develop less optimally if we open the door to them using AI?

- Can AI be used as a brainstorming partner or research assistant to do the opposite and increase brain activity? Is every use of AI a net negative for learners, or only the kinds of uses the MIT researchers studied?

The authors of the study concluded their abstract with this somewhat muted cautionary statement: “These results raise concerns about the long-term educational implications of LLM reliance and underscore the need for deeper inquiry into AI’s role in learning.” In other words, we should probably pay attention to this.

I’m not saying don’t run; I am saying let’s make sure we avoid smashing our collective face.

An Ethic for Educators

We need to think carefully about what we value as educators and about the ethical frameworks that we want to live by and teach from so that we can make good decisions about how AI will be taught or used in the classroom and in our own practice.

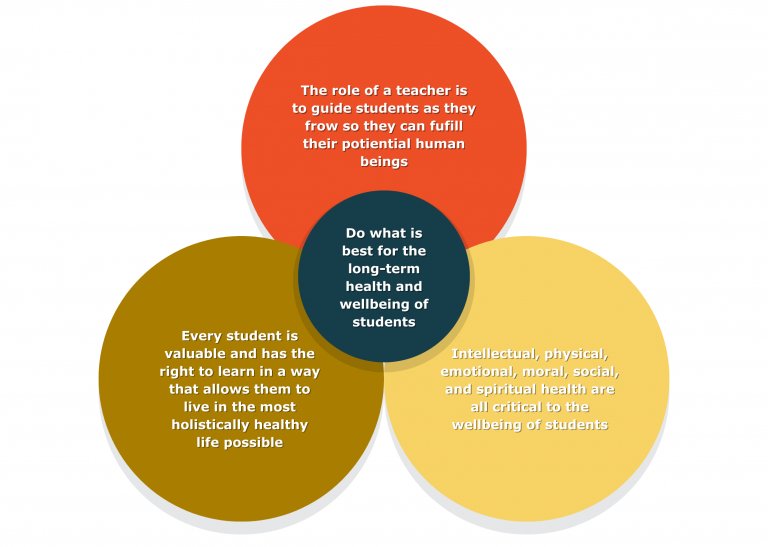

Because ethical questions are complicated, particularly on an issue as complex as the rise of AI, it can be helpful to create a framework to guide our thinking. When I teach ethics, I have students create a Venn diagram that allows them to narrow down their most important ethical principles. Around the outside are three general principles that underpin the ethical framework; in the centre is a single statement that summarizes the core principle a person would want to follow in order to live by this ethic.

Below is a Venn diagram based on a love ethic, an ethic of care, or a form of altruism.

In this case, “Always do what is loving toward others” would be the central ethical principle guiding this person’s life.

Could a similar framework be built for teachers?

I would like to propose one, which I believe could be applied to many thorny ethical educational questions, including ones about AI.

Questions to Run Through the Framework

After my ethics students create their ethical frameworks, I have them test those frameworks against ethical scenarios. I ask them to consider which of their core ethical principles might help them decide the right thing to do in a tricky situation. Below are two questions that I think could be tested against this framework:

Should We Use AI to Help Us Assess Student Work?

Using the ethical framework above, one might come to very different conclusions about the use of AI in student assessment:

If the primary role of a teacher is to guide students as they grow:

- Perhaps assessment is where much of that guidance happens, so offloading that responsibility to an AI chatbot is not the right thing to do.

- Perhaps aspects of assessment practice could be automated, allowing teachers to live a more balanced life and to be more present for their students.

If every student is valuable:

- Perhaps time spent assessing their work is time well spent and should not be outsourced.

- Perhaps AI could support assessment by catching mistakes that would otherwise be missed.

If our students’ health and well-being are of primary importance:

- Perhaps we want to be personally knowledgeable about how they are doing academically.

- Perhaps AI can assist a teacher in creating an effective growth plan for students who are struggling.

If we want to do what is best for the long-term health of our students:

- Perhaps assessment is not an area we want to use AI in, as personally taking responsibility for our students’ learning is part of building a meaningful teacher-student relationship.

- Perhaps assessment is an area we want to use AI in, as using it to enhance our personal knowledge of our students could lead to building a meaningful teacher-student relationship with them.

So once again, the answer is, “It depends.” You might find yourself more aligned with one line of reasoning or the other, and that is okay. The important thing is to think carefully about it and to harness AI in ethical ways that benefit your students.

Should We Use AI to Help Us Create Lesson Plans or Course Outlines?

Again, very different conclusions could be drawn:

If the primary role of a teacher is to guide students as they grow:

- Perhaps AI would hinder teacher creativity, removing teachers’ personality and individuality from their practice.

- Perhaps AI can aid a teacher in creating meaningful, impactful lessons that they otherwise would not have thought of.

If every student is valuable and their health and well-being are of primary importance:

- Perhaps, because AI does not know the classroom the way the teacher does, it cannot know what these particular students need.

- Perhaps AI can help a teacher create differentiated learning plans for students who are struggling, providing additional paths to success for all learners.

If we want to do what is best for the long-term health of our students:

- Perhaps, if lesson planning is where we get to shine creatively as teachers, outsourcing that creativity is not in the best long-term interests of our students.

- Perhaps, if AI can help us become better, more creative teachers, that is in the best long-term interests of our students.

Again, the answer is, “It depends,” but I hope this exercise shows that an ethical framework can help us be more nuanced and careful about the way we use AI: not “gung ho,” but thoughtful and strategic in applying our ethical conclusions as we weave these technologies into our practice.

Conclusion

Even with a clear ethical framework, the right thing to do is not always straightforward to discern. The important thing for us as teachers, though, is that we take the time to carefully consider how the choices we make are impacting our students and ourselves. AI is like a shiny new toy, and it is easy to get excited about the possibilities. We cannot, however, afford to “move fast and break things.” AI is a powerful tool, and it has the potential to shape society — and the minds of our students — in ways that we cannot currently predict.

As educators, we have a responsibility to prepare the next generation for a world that does not yet exist. I hope that we can move forward with confidence, trusting that we can shape that future in positive ways through what we imagine and what we do. We owe it to our students, and even to future educators, to think carefully about where and how AI fits.

Because smashing our faces against fences is something we would all like to avoid.

About the Author