I was a big fan of Star Trek: The Next Generation when I was growing up. For some of you, this admission might diminish my credibility. I’ll take that risk. Others may be wondering how many Star Trek conventions I’ve been to or what my favorite episode is. Answers: Zero, and Season 3, Episode 26.

One of the things that fascinated me about this show as a teenager, and that continues to fascinate me, is the way the show wrestles with important moral and ethical issues that are enduringly relevant. One theme that is explored throughout the series is the interaction of humans and technology. With the recent explosion of artificial intelligence technology, some of these questions are becoming increasingly important:

- What is the essential difference between human beings and human-created technology? (S2, E9)

- What could happen if technologies we created got out of our control? (S2, E3)

- What are the ethical implications of creating human-like technologies? (S3, E16)

As an online teacher, I might find these larger ethical questions about technology a little academic when, in reality, I just need to make sure my students are not cheating by getting ChatGPT to write their essays for them.

I get it. I’m there too. There are definitely days when it would be enough to figure out how to stop my students from trying to sneak AI-generated journal entries to me. However, I am convinced that the questions of how technology impacts our humanity are still important to ask, because our responses to those questions will impact how we respond to the day-to-day ethical questions of how to help students learn to use technology responsibly.

The Wild West of the Spring of 2023

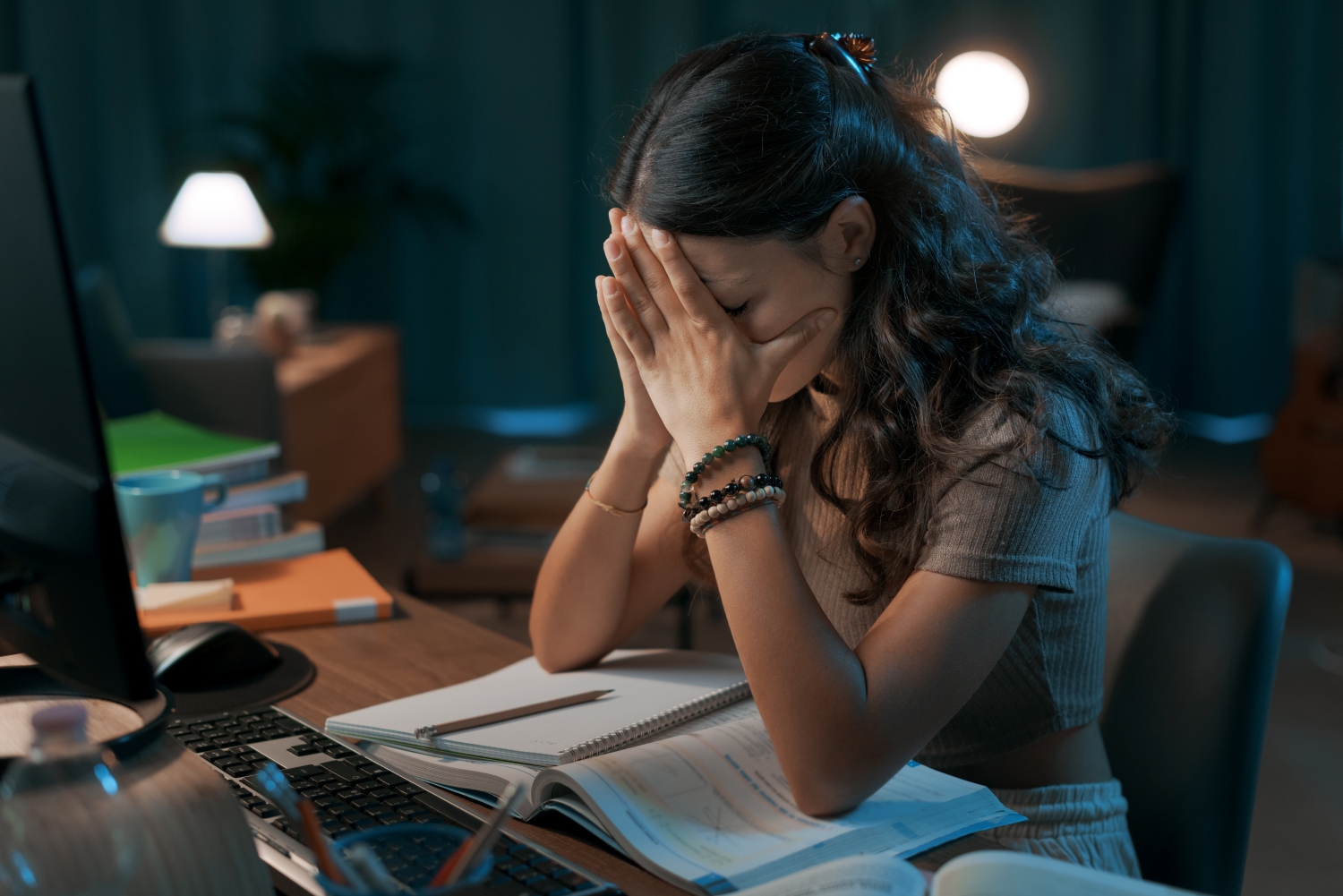

The first few months after ChatGPT’s public release felt like the Wild West for me as an online teacher. I teach online Political Studies, Literary Studies, and Social Studies, and I was stunned at how many of my students were trying to hand in work that was clearly computer-generated.

I had dozens of students whose writing went from barely readable to grammatically perfect in the space of a week; other students who typically handed in thirty words on smaller activities were suddenly submitting 250 to 300 perfectly polished words. It was brazenly obvious, and, at first, I did not know how to respond. I was spending about a third of my teaching and marking time trying to keep track of it all.

At that time, our school did not have a clear policy about AI. It was too new. All of us, administration and teachers alike, were just trying to figure out how to navigate this new world. Students were trying to figure out how to navigate it too. I think some of them probably recognized that what they were doing was wrong, but others, I am almost certain, genuinely thought that what they were doing was okay.

For a while, AI-detection software was helpful, as I could simply paste student work into one of the detectors and have all the potentially AI-generated writing highlighted. However, some students managed to get around it. One student, we later found out, had typed the prompt, “Write this as if I was a Grade 8 student.” The assignment contained so many mistakes that, if she had not been caught using AI in another course, we might have missed it entirely.

This is why I am convinced that if we are going to encourage our students to be honest, we are going to need them to think about the larger ethical implications of AI use. We need to frame these questions as moral questions, not just give students rules, try to catch them, and punish them if they step out of line. In other words, students need to learn how to reason ethically because, as with anything else, that is the only way they are going to learn how to behave ethically.

Punishment-reward ethical training only gets you so far.

Framing the Ethical Questions

The fact is, AI technology is developing faster than any of us can keep up with. Detection software is unreliable, and the risk of making a false accusation against our students is high. We need a relationship-based approach to keeping our students honest in the new reality of AI.

1.) Students need to know why getting AI to write their work is wrong

Through extensive conversations, my colleagues and I determined that we could probably prevent a significant amount of unethical AI use simply by teaching students why handing in an AI-generated essay or journal entry is wrong. We created a series of videos that teachers can put at the beginning of their courses, including two that show how using AI to write your homework is the moral equivalent of getting your older sibling to write your homework.

For most students, watching these videos will probably be enough for them to recognize why cheating with AI is morally wrong, and as a side benefit, they may even recognize that taking shortcuts to academic success will actually not benefit them in the long run.

2.) Students need to know where the line is

There are different opinions in the educational world about how to cope with the reality of a world with large language models. Should we, as teachers, be teaching our students how to use AI so that they will use it well? Or should we be teaching our students not to use it at all because they need to develop their overall academic and thinking skills before adding AI into the mix?

There is no time to answer these questions in this blog post, but there is time to say this: You need to figure out where you stand on that issue and let your students know what they are allowed to do and what they are not allowed to do in your course.

Here is the third video in our series on AI use, which dives into some of the bigger questions of how AI could possibly be used in an ethically responsible way.

Notice that the video is open-ended, not really saying that one approach as a teacher is better than another. I know that my own approach is different from some of my colleagues’ approaches. Chances are, unless your school has created a blanket policy for all teachers, your colleagues will have a variety of approaches. Maybe that is a good thing, especially as we are all, as a species, trying to find our way through this.

3.) When violations happen, use them as teachable moments

It is likely that, even if you tell students where the line is, some of them will still cross it. The pressure on our students these days is intense, and for some, the AI shortcut is just too tempting. I try to approach each instance as an opportunity to connect, engage, build a relationship, and be a teacher. Emails might sound something like this:

I noticed that your recent assignment has a different writer’s voice than what I’m used to from you. I am wondering if you could tell me a little bit more about your writing process. Were there any online grammar tools or resources that you used that you might have forgotten to cite? I’d like to set up a meeting with you so we can have a conversation about this.

I might also say something like this:

I ran your assignment through some AI-detection software and got some mixed results. These programs are not 100% accurate, so I do not want to falsely accuse you, but I am wondering if you could help me figure out what might have happened here.

The goal is not to put students on the defensive, but to invite a further conversation. In the follow-up meeting, students may or may not admit to what they have done, and you might never actually know with 100% certainty what happened. However, if you preserve the relationship and build rapport, hopefully it will still be a learning experience for them.

It is also far better to have these kinds of conversations over low-stakes assignments in high school than over a high-stakes exam or thesis in post-secondary school.

Bigger Questions of Technology and AI

I have a lot of concerns about where the world of AI is going to take us in the future:

- I am concerned that some of the most fundamental human skills we have — thinking, reasoning, creating, and communicating — could be hindered in the future if we let computers do too much of that work for us.

- I am concerned about bad actors in the world using AI to increase political polarization, misinformation, and confusion about basic facts, leading to additional murkiness around what is true and what is not in the world.

But I’m less concerned about students cheating with AI than I was back in the spring of 2023, as I have seen a significant decrease in the number of suspicious assignments. Perhaps it is because I clearly explain the AI guidelines for my course to every new student. Perhaps it is because any student who might have tried cheating with AI has already been caught at least once and has stopped.

Regardless, AI is now part of our world, and just like we, as teachers, needed to teach our students to use Google responsibly, we are now going to need to teach them to use generative AI responsibly. As we do so, let’s make sure to do it in a way that preserves our relationships, teaches moral reasoning, and prepares them adequately for an unknown future.

Back to Star Trek, TNG

There is an intriguing and somewhat creepy episode of Star Trek: The Next Generation in which the Enterprise becomes trapped in space. The ship’s chief engineer, Geordi La Forge, brainstorms a way out with the help of a holographic recreation of a brilliant engineer. While he works with this hologram, he develops feelings for her (creepy), but he also comes up with a creative solution that frees the Enterprise (intriguing). Perhaps this is a picture of what AI could be for our students at its best: an opportunity to brainstorm and enhance human creativity and ingenuity.

La Forge, though, learned a hard lesson — falling in love with technology is fraught with difficulties. While we are teaching our students to use technology well, let’s not forget that in education, creativity, and living out our best lives as human beings, face-to-face, human-to-human relationships are vital.

About the Author

Brian Oger, B.A., MDiv

Director of StudyForge

Are you a StudyForge teacher who wants to add AI resource videos to your course?